Deepfake technology, which uses artificial intelligence to create hyper-realistic but fabricated videos and images, is advancing rapidly and becoming more accessible. The resulting surge in deepfake content is poised to ignite unprecedented legal battles, because it raises hard questions for law enforcement, privacy rights, intellectual property, and free speech.

At its core, deepfake technology allows anyone with relatively modest technical skills to produce convincing fake videos or images that can depict people saying or doing things they never actually did. This capability raises serious concerns about misinformation, defamation, harassment, and exploitation. For example, deepfakes have been used to create non-consensual sexual imagery, often targeting women and public figures, which can cause severe reputational and emotional harm. Such misuse has prompted states like Michigan and New Jersey to enact laws criminalizing the creation and distribution of harmful deepfake content, especially involving sexual situations or political manipulation.

Legal battles around deepfakes will likely be unprecedented because they touch on multiple, often conflicting, legal principles:

1. **Intent and Knowledge**: Prosecutors must generally prove that the accused knowingly created or distributed deepfake content with harmful intent, such as harassment or fraud. Doing so requires sophisticated digital forensics to trace the origin of the content and link it to the perpetrator. Defendants may argue lack of intent, mistaken identity, or that their use is protected speech such as satire or parody.

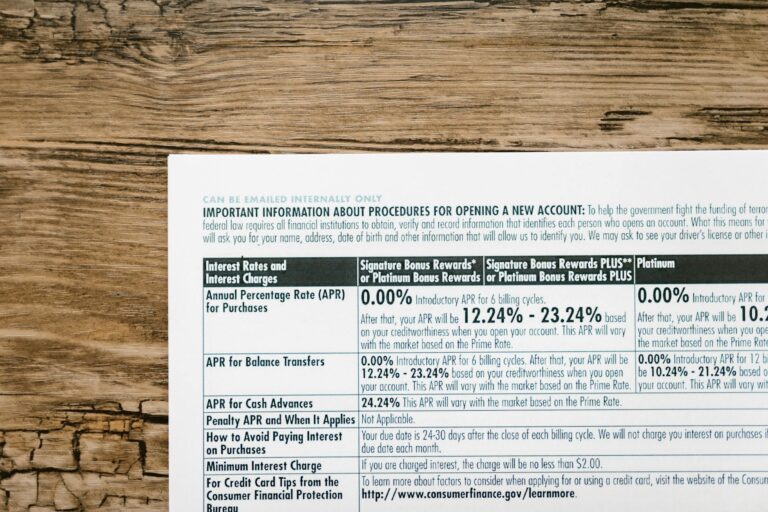

2. **Privacy and Consent**: Deepfakes often violate individuals’ privacy by depicting them in fabricated scenarios without consent. Laws like the “TAKE IT DOWN Act” require covered platforms to remove non-consensual intimate images within a strict timeframe (48 hours of a valid request, in that Act’s case), imposing new responsibilities on social media and hosting sites. Perpetrators can face both criminal prosecution and civil lawsuits for damages brought by victims, creating a dual legal front.

3. **Platform Liability and Regulation**: Online platforms face mounting pressure to police deepfake content proactively. Attorneys general from multiple states have urged search engines and payment processors to block the spread and monetization of deepfake non-consensual intimate imagery. This raises complex questions about the extent of platform liability, free speech protections, and the technical feasibility of content moderation at scale.

4. **Technological Challenges in Evidence and Detection**: Courts will grapple with the reliability of AI detection tools used to identify deepfakes. Defense teams may challenge the accuracy of these tools, arguing that false positives could lead to wrongful accusations. The evolving nature of AI means legal standards for digital evidence must adapt rapidly.

5. **Free