YouTube could face new lawsuits related to child exploitation content as concerns about the platform’s handling of children’s safety and privacy continue to grow. In recent years, YouTube has come under intense scrutiny for how it manages videos aimed at or involving children, especially with regard to protecting their personal data and limiting their exposure to harmful content. This scrutiny has already led to significant legal actions and settlements, and the current landscape suggests more lawsuits could follow.

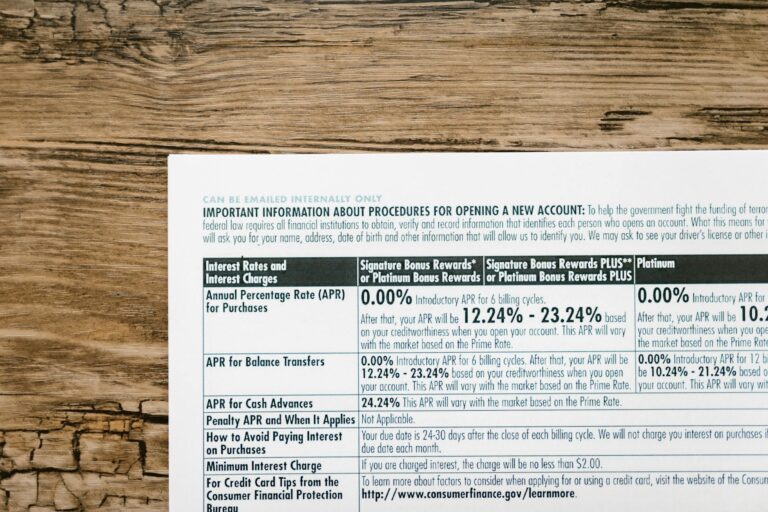

One major issue revolves around YouTube’s compliance with the Children’s Online Privacy Protection Act (COPPA), a federal law designed to protect children under 13 by requiring parental consent before collecting their personal information. YouTube has faced lawsuits alleging that it collected data from children without proper consent, enabling targeted advertising that violates COPPA. For example, Google and YouTube agreed to pay $30 million in 2025 to settle a class action lawsuit claiming such violations. This settlement followed a previous $170 million penalty in 2019 for similar issues, showing a pattern of legal challenges tied to children’s privacy on the platform.

A related concern is the mislabeling of videos as “Made for Kids” (MFK) or “Not Made for Kids” (NMFK). This designation determines how YouTube treats a video in terms of data collection and advertising restrictions. Disney, for instance, agreed to pay $10 million to settle allegations that it failed to properly label some of its YouTube videos featuring popular children’s content such as Frozen and Toy Story. The mislabeling allegedly allowed the unlawful collection of children’s personal data and exposed young viewers to age-inappropriate YouTube features. The settlement also requires Disney to implement a program to review video designations and to comply strictly with COPPA rules.

Beyond privacy, there is a growing wave of lawsuits targeting social media and video platforms, including YouTube, for their role in exposing children and teens to harmful content and addictive algorithms. Families and advocacy groups have filed lawsuits alleging that platforms intentionally design features that exploit young users’ vulnerabilities, leading to mental health issues such as anxiety, depression, eating disorders, and even suicidal thoughts. These lawsuits argue that companies like YouTube have a responsibility to prevent harm caused by addictive content and to warn users about potential risks.

In addition to privacy and mental health concerns, there are also emerging legal actions specifically addressing child exploitation content. For example, a family in North Carolina filed a lawsuit against Roblox, a platform similar to YouTube in its reliance on user-generated content, alleging child sexual exploitation. While this lawsuit is not directly against You