**Are Censorship Algorithms Grounds for Consumer Lawsuits?**

Censorship algorithms, the automated systems platforms use to moderate, filter, or suppress content, have become a central feature of the digital landscape. These algorithms decide what content users see or don’t see, often without transparent explanations. That raises a question: can consumers sue companies over the use of these censorship algorithms? The answer is complex and depends on legal, technical, and ethical considerations.

At their core, censorship algorithms are tools designed to enforce platform policies, community guidelines, or legal requirements. They operate by scanning user-generated content for violations such as hate speech, misinformation, explicit material, or other prohibited content. When content is flagged, it may be removed, hidden, or demoted in visibility. This process is often opaque, leading to accusations of unfair censorship or bias.
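To make the scan, flag, and act sequence concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than any platform's actual system: the `POLICY` table, the trigger terms, and the `moderate` function are hypothetical, and real systems typically rely on trained classifiers rather than keyword lists.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    DEMOTE = "demote"
    HIDE = "hide"
    REMOVE = "remove"


# Actions ordered from least to most severe.
SEVERITY = [Action.ALLOW, Action.DEMOTE, Action.HIDE, Action.REMOVE]

# Hypothetical policy table: category -> (trigger terms, action taken).
# The terms are placeholders chosen for illustration only.
POLICY = {
    "explicit": ({"nsfw"}, Action.HIDE),
    "misinformation": ({"miracle cure"}, Action.DEMOTE),
    "hate_speech": ({"slur_example"}, Action.REMOVE),
}


@dataclass
class Decision:
    action: Action
    categories: list[str]  # which policy categories were matched


def moderate(text: str) -> Decision:
    """Scan text against the policy and return the most severe matching action."""
    lowered = text.lower()
    matched = []
    action = Action.ALLOW
    for category, (terms, category_action) in POLICY.items():
        if any(term in lowered for term in terms):
            matched.append(category)
            # Keep whichever action is more severe.
            if SEVERITY.index(category_action) > SEVERITY.index(action):
                action = category_action
    return Decision(action, matched)


if __name__ == "__main__":
    print(moderate("Try this miracle cure today"))
```

Note that the `Decision` object records which categories were matched. The opacity complaint described above arises when that kind of information exists internally but is never shown to the affected user.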

**Legal Grounds for Consumer Lawsuits**

For consumers to successfully sue over censorship algorithms, they generally need to establish that the platform’s actions caused them harm and that those actions were unlawful or breached a contract. Several legal theories might be invoked:

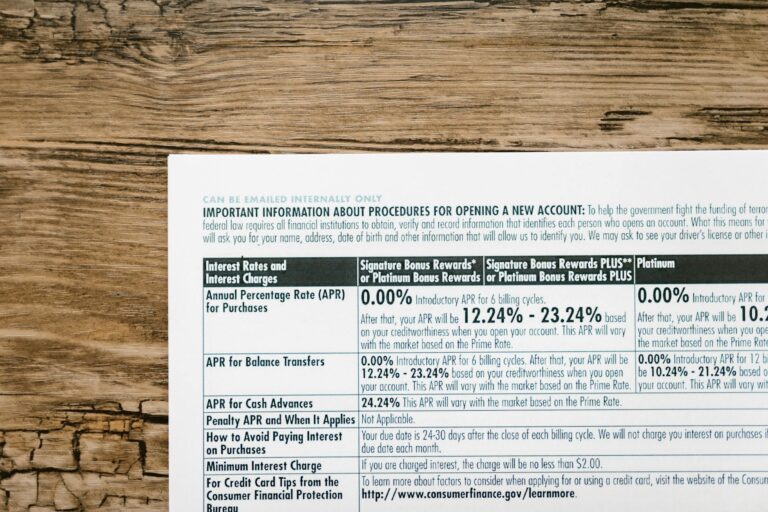

– **Breach of Contract or Terms of Service:** Users agree to platform terms, which often include clauses about content moderation. If a platform violates its own stated policies or applies algorithms inconsistently, users might claim breach of contract. However, terms often grant platforms broad discretion over moderation, which makes such claims difficult to win.

– **Violation of Free Speech Rights:** In many countries, free speech protections limit government censorship but do not apply to private companies. Because platforms are private entities, they can generally moderate content as they see fit, so free speech claims usually do not hold against them.

– **Consumer Protection Laws:** If censorship algorithms mislead consumers or unfairly restrict access to services, users might argue violations of consumer protection statutes. For example, if a platform’s algorithm suppresses content without clear reasons or transparency, this could be seen as deceptive or unfair.

– **Discrimination Claims:** If algorithms disproportionately censor content from certain groups (e.g., marginalized communities), lawsuits might allege discrimination under civil rights laws. Proving algorithmic bias and causation is challenging but increasingly a focus of legal scrutiny.

– **Defamation or Reputation Harm:** Users whose content is wrongly censored might claim reputational damage, but this is difficult to prove unless the platform’s actions are malicious or negligent.
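For the discrimination theory above, a first step in any analysis would be comparing how often content from different groups is removed. The sketch below shows that arithmetic in Python. The log format, the group labels, and the function names are assumptions made for illustration, and the numbers are invented. A gap in removal rates does not by itself establish bias or causation, which is part of why these claims are hard to prove.

```python
from collections import Counter


def removal_rates(log):
    """Compute the fraction of posts removed per group.

    `log` is an iterable of (group, was_removed) pairs; this format is
    a hypothetical stand-in for whatever moderation records exist.
    """
    totals, removed = Counter(), Counter()
    for group, was_removed in log:
        totals[group] += 1
        if was_removed:
            removed[group] += 1
    return {group: removed[group] / totals[group] for group in totals}


def rate_ratio(rates, group, reference):
    """How many times more often `group` is removed than `reference`."""
    return rates[group] / rates[reference]


if __name__ == "__main__":
    # Invented sample: 40 posts per group, 10 vs. 20 removals.
    sample_log = (
        [("group_a", True)] * 10 + [("group_a", False)] * 30
        + [("group_b", True)] * 20 + [("group_b", False)] * 20
    )
    rates = removal_rates(sample_log)
    print(rates)
    print(rate_ratio(rates, "group_b", "group_a"))
```

A comparison like this only describes outcomes. It says nothing about whether the posts in each group actually differed in policy violations, which is the causation question a court would still need answered.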

**Challenges in Suing Over Censorship Algorithms**

Several factors complicate consumer lawsuits:

– **Algorithmic Opacity:** Platforms rarely disclose how their algorithm