The use of artificial intelligence (AI) in public healthcare oversight has become increasingly controversial. While AI is touted as a tool to streamline processes and improve efficiency, its application in healthcare decisions has raised significant concerns about fairness, accuracy, and patient care.

In the United States, health insurers face growing scrutiny over the use of AI in claim decisions. Critics allege that insurers rely on AI systems to deny claims without adequate human review, leading to wrongful denials and delayed care. UnitedHealth Group, for example, has faced legal challenges over its use of AI in coverage decisions, with critics arguing that such systems overlook clinical nuances and put cost savings ahead of patient needs.

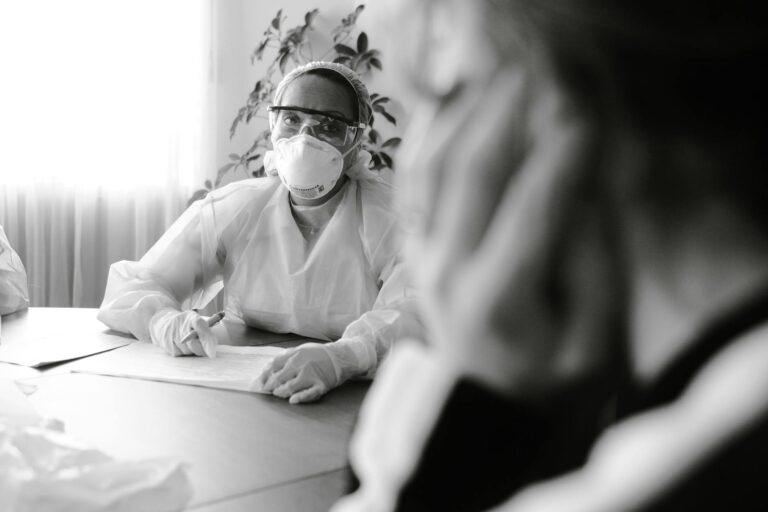

A primary concern is that AI systems cannot interpret complex medical situations as well as human clinicians can, which can lead to decisions that are not in the patient's best interest. Public distrust is also a problem: a recent national study found that more than 65% of adults expressed low confidence in their healthcare system's ability to use AI responsibly.

Regulators are beginning to respond. California's Physicians Make Decisions Act (SB 1120) requires that determinations of medical necessity be made by a licensed physician or other qualified health care professional, not by an AI algorithm alone. The law offers a model for other states to follow and underscores the need for human oversight in healthcare decisions.

Legal battles are also underway. A proposed class-action lawsuit against UnitedHealth Group, filed in federal court in Minnesota, alleges that the insurer's reliance on AI to deny claims amounts to breach of contract and bad faith. The case highlights the legal risks insurers face when they use AI without adequate human oversight.

The controversy surrounding AI in healthcare is not limited to insurance claims. The broader use of AI in administrative functions, such as billing automation and patient scheduling, also raises concerns about a digital divide: well-resourced health systems are better positioned to evaluate and refine AI tools, while underfunded institutions may struggle to adopt them effectively.

For AI to fulfill its potential in healthcare, it needs rigorous testing, ongoing monitoring, and periodic recalibration to reduce bias and improve reliability. Just as new drugs undergo extensive clinical trials before widespread adoption, AI-driven medical tools should be subject to stringent validation. Only through robust oversight and transparency can AI earn the trust of patients and providers alike.

In conclusion, while AI holds significant promise in healthcare, its controversial use in public healthcare oversight underscores the need for careful consideration and regulation. Ensuring that AI tools augment, rather than replace, human judgment is crucial for maintaining trust and ensuring patient access to necessary care. The future of healthcare depends on striking a balance between technological efficiency and human oversight.